AI’s hardware bottleneck

Almost one year into the current AI hype cycle, one thing has become abundantly clear: deep learning (DL) applications, such as large language models (LLMs), require massive amounts of compute and energy to train and query. Even when hundreds of GPUs are combined to process data in parallel, the interconnects between them aren’t fast enough to keep up with these complex workloads. For AI to reach its full potential, a complete architectural overhaul is needed.

State of computing today

Modern-day computers use the von Neumann architecture (developed by John von Neumann roughly 80 years ago), which assumes that every computation pulls data from memory, processes it, and then sends it back to memory. Although the design has been upgraded and improved over the years, it is quickly running into physical limits, specifically the end of both Moore’s law and Dennard scaling.

(semiengineering.com)
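To make the load/process/store cycle concrete, here is a minimal Python sketch of a toy von Neumann-style loop. It rests on simplifying assumptions (a flat list stands in for memory, with no caches, registers, or pipelining), and the function name `vector_add_von_neumann` is hypothetical. Adding two arrays element by element already needs three memory transfers for every single addition:

```python
def vector_add_von_neumann(memory, a_addr, b_addr, c_addr, n):
    """Toy load/compute/store loop: every addition fetches its operands from
    memory and writes its result back, one word at a time."""
    transfers = 0
    arithmetic = 0
    for k in range(n):
        x = memory[a_addr + k]   # load operand 1
        y = memory[b_addr + k]   # load operand 2
        transfers += 2
        z = x + y                # the only actual computation
        arithmetic += 1
        memory[c_addr + k] = z   # store the result back to memory
        transfers += 1
    return transfers, arithmetic


n = 1000
memory = [0] * (3 * n)
memory[:n] = range(n)              # array a lives at addresses 0..n-1
memory[n:2 * n] = range(n, 2 * n)  # array b follows it
transfers, arithmetic = vector_add_von_neumann(memory, 0, n, 2 * n, n)
print(transfers, arithmetic)  # 3000 1000
```

Real processors hide some of this traffic with caches, but the ratio of data movement to arithmetic is the root of what is often called the von Neumann bottleneck.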

Moore’s Law

Moore’s law, named after Gordon Moore (co-founder of Fairchild Semiconductor and Intel), stems from his 1965 prediction that the number of transistors on a computer chip would double every year (revised to “every two years” in 1975). It has held remarkably true over the past 50 years. The more transistors on a chip, the more computing power. By innovating in design, materials, and manufacturing processes, chipmakers have been able to keep the prophecy alive.
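The compounding behind Moore’s law is easy to underestimate. Here is a minimal sketch, assuming an idealized, perfectly regular doubling period and a hypothetical starting count of 1,000 transistors (illustrative numbers, not real chip data). It also shows how much the 1975 revision from one-year to two-year doubling changes the picture:

```python
def projected_transistors(start_count, start_year, year, doubling_period_years=2.0):
    """Idealized Moore's-law projection: the count doubles once per period."""
    doublings = (year - start_year) / doubling_period_years
    return start_count * 2 ** doublings


# Hypothetical chip with 1,000 transistors in year 0
ten_years_annual = projected_transistors(1_000, 0, 10, doubling_period_years=1)  # 1965 version
ten_years_biennial = projected_transistors(1_000, 0, 10)                          # 1975 revision
fifty_years = projected_transistors(1_000, 0, 50)

print(ten_years_annual)    # 1024000.0
print(ten_years_biennial)  # 32000.0
print(fifty_years)         # 33554432000.0
```

Over 50 years, two-year doubling multiplies the count by 2^25 (about 33.5 million), so even a modest stretch in the doubling period compounds into a large gap.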

However, there are physical limits to Moore’s law. As transistors shrink toward atomic dimensions, they stop behaving reliably because of quantum effects at that scale, such as electrons tunneling through barriers that are meant to block them. We’re arguably already at, or very close to, that limit today.

Dennard Scaling

Dennard scaling, based on a 1974 paper by Robert H. Dennard, is a scaling law stating that as transistors get smaller, their power density stays constant, so power use stays proportional to area. In other words, as transistor density doubles every two years, power consumption per unit area remains the same. Coupled with Moore’s law, this meant performance per joule (energy efficiency) doubled roughly every 18 months. However, like Moore’s law, Dennard scaling is also running into physical limits.

(nextplatform.com)
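The constant-power-density result can be sketched with the standard dynamic-power approximation P ≈ C·V²·f (switching power only, ignoring leakage). Assume ideal constant-field scaling: every linear dimension and the supply voltage shrink by a factor κ > 1, capacitance shrinks by κ, clock frequency rises by κ, and each transistor’s area shrinks by κ²:

```latex
\begin{aligned}
C' &= \frac{C}{\kappa}, \qquad V' = \frac{V}{\kappa}, \qquad f' = \kappa f, \qquad A' = \frac{A}{\kappa^{2}} \\
P' &= C' V'^{2} f' = \frac{C}{\kappa}\cdot\frac{V^{2}}{\kappa^{2}}\cdot\kappa f = \frac{P}{\kappa^{2}} \\
\frac{P'}{A'} &= \frac{P/\kappa^{2}}{A/\kappa^{2}} = \frac{P}{A}
\end{aligned}
```

Each transistor draws 1/κ² of its former power while occupying 1/κ² of its former area, so κ² times as many transistors fit in the same area at the same total power.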

The physics that drove this trend broke down somewhere between 2005 and 2007, when transistors became so small that increased current leakage caused chips to overheat, effectively halting further efficiency gains.
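One rough way to see why the trend broke is to keep the ideal scaling model but stop shrinking the supply voltage. This is a simplification: leakage is what prevented voltage from dropping further, and it is modeled here only indirectly. The sketch uses the dynamic-power approximation P = C·V²·f with baseline values normalized to 1.0. The function name `scaled_power_density` is hypothetical:

```python
def scaled_power_density(kappa, voltage_scales=True):
    """Relative power density after shrinking features by kappa (> 1), using
    P = C * V**2 * f with all baseline values normalized to 1.0."""
    c = 1.0 / kappa                              # capacitance shrinks with feature size
    v = 1.0 / kappa if voltage_scales else 1.0   # ideal Dennard: voltage shrinks too
    f = kappa                                    # shorter delays allow a faster clock
    area = 1.0 / kappa ** 2                      # each transistor occupies less area
    power = c * v ** 2 * f
    return power / area


for k in (2.0, 4.0):
    ideal = scaled_power_density(k)
    stalled = scaled_power_density(k, voltage_scales=False)
    print(k, ideal, stalled)
```

With voltage stuck, halving feature size (κ = 2) quadruples power density instead of keeping it flat. That outcome is consistent with why clock frequencies largely plateaued in the mid-2000s.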

Computing at a crossroads

On one hand, we’re experiencing an explosion in AI research and development that requires exponentially more processing power. On the other, we’re quickly approaching the theoretical limits of modern-day hardware. To materially scale AI (in both capacity and distribution), we need a new computing architecture.

All eyes on quantum

Even if you don’t know what it is, you’ve most likely heard of quantum computing: a massively powerful data processing technology that leverages the properties of quantum physics. While it sounds like science fiction, it’s close to becoming a reality within the next decade or so. Although the technology has been in development since the 1980s, it’s gotten more attention lately, in part because of the generative AI hype over the past nine months.

Is all the attention warranted though?

(global Google Trends: “quantum computing”)

How it works

Instead of processing binary data (bits) like conventional computers, quantum computers process data in quantum bits (qubits), which can exist in a superposition of multiple states simultaneously (enabling much richer data logic). Using special algorithms that exploit these properties, quantum computers can solve certain classes of problems (e.g., cryptography, simulations, optimization) significantly faster than classical systems.
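Superposition is easier to picture with a tiny classical simulation. The sketch below is a toy state-vector simulator in pure Python. The function names are hypothetical, and this is not how quantum hardware works; it shows no speedup. It applies a Hadamard gate to each of n qubits, starting from the all-zeros state. The result is an equal superposition over all 2ⁿ basis states. It also hints at why classical simulation gets expensive: tracking n qubits takes 2ⁿ amplitudes.

```python
import math


def apply_hadamard(state, target, n_qubits):
    """Apply a Hadamard gate to qubit `target` of an n-qubit state vector."""
    h = 1 / math.sqrt(2)
    new_state = state[:]
    for i in range(2 ** n_qubits):
        if not (i >> target) & 1:          # visit each (bit=0, bit=1) pair once
            j = i | (1 << target)
            a, b = state[i], state[j]
            new_state[i] = h * (a + b)
            new_state[j] = h * (a - b)
    return new_state


def uniform_superposition(n_qubits):
    """Start in |00...0> and put every qubit into superposition."""
    state = [0j] * (2 ** n_qubits)
    state[0] = 1 + 0j
    for q in range(n_qubits):
        state = apply_hadamard(state, q, n_qubits)
    return state


state = uniform_superposition(3)
probs = [abs(amp) ** 2 for amp in state]   # measurement probabilities
print(len(state))                          # 8 amplitudes for 3 qubits
print([round(p, 6) for p in probs])        # eight values of 0.125
```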

Quantum limitations

For quantum computers to process data accurately, qubits must stay in superposition (existing in multiple states simultaneously). For many qubit technologies, this requires cooling to near absolute zero (about −273 °C), where atoms slow to near stillness. Quantum processors must also operate in a near-vacuum and be shielded from the Earth’s magnetic field. Otherwise, the qubits lose coherence and the machine produces errors that are not easily corrected (since qubits can take an infinite number of states). As a result, it has been incredibly difficult to scale quantum computers while maintaining long coherence times and low error rates.
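Why do error rates make scaling so hard? Consider a simple back-of-the-envelope model. Assume each gate fails independently with the same hypothetical probability p; real devices have correlated and gate-specific errors. The chance that a circuit of n gates runs with no error at all is then (1 − p)ⁿ:

```python
def error_free_probability(gate_error_rate, num_gates):
    """Chance that every gate succeeds, assuming independent, identical errors."""
    return (1 - gate_error_rate) ** num_gates


# Hypothetical per-gate error rate of 0.1%
for gates in (100, 1_000, 10_000):
    print(gates, round(error_free_probability(0.001, gates), 4))
```

Even at a 0.1% error rate, a 1,000-gate circuit finishes cleanly only about 37% of the time. This is why quantum error correction, and the extra qubits it requires, is central to scaling.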

Current state and outlook

Given the complexities of manipulating quantum physics, quantum computers are still likely 10+ years away from adoption (20-30 years away from more complicated applications) — so its recent hype/attention is likely premature and overblown.

Yole Intelligence, a leading market research firm, predicts the quantum computing market will reach only $2B by 2030. That is insignificant (under 0.1%) compared with the expected $2.5T global computing market (Fortune Business Insights).

Fortunately there’s another next-generation computing paradigm that’s much closer to reality…

"Quantum computing is a technology that, at least by name, we have become very accustomed to hearing about and is always mentioned as 'the future of computing'. Neuromorphic computing is, in my mind, the most viable alternative [to classical computing]. If a neuromorphic processor were to be developed and implemented in a GPU, the amount of processing power would surpass any of the existing products with just a fraction of the energy."

-Carlos Andrés Trasviña Moreno, Software Engineering Coordinator at CETYS Ensenada

Neuromorphic computing: artificial brains

Never heard of neuromorphic computing? You’re not alone — the technology has gone relatively unnoticed vs. quantum over the past decade+, which is ironic considering it offers a much more effective solution for scaling AI (especially for deep learning applications) and is close to production-ready.

(global Google Trends: “quantum computing” in blue, “neuromorphic computing” in red)

How it works

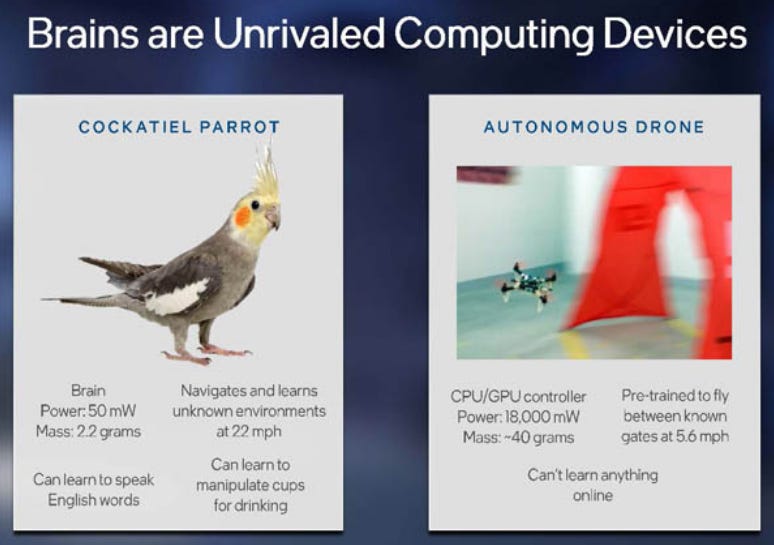

This technology attempts to imitate the way the human brain works. Why? Animal brains are far more energy efficient than any modern-day computer. The world’s fastest supercomputer requires 21 million watts of power. Our brains, by comparison, use a mere 20 watts, roughly what a dim light bulb draws and about a million times less.

(Intel marketing materials)

Neuromorphic hardware and software elements are wired to mimic the nervous and cerebral systems in order to learn, retain information, search for new information, and make logical deductions like a human brain does. They rely on artificial neurons to receive input signals from other neurons, process that information, and then send the output to other neurons. By connecting large numbers of these artificial neurons, these systems can simulate the complex patterns of activity that occur in the human brain.
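One common way to model these artificial neurons is the leaky integrate-and-fire (LIF) neuron, used in many spiking neural networks. The sketch below is a simplified discrete-time version in pure Python. The threshold, leak, and weight values are hypothetical, and real neuromorphic chips implement richer dynamics in hardware. Neuron A integrates a steady input and fires periodically. Its spikes, scaled by a synaptic weight, become the input to neuron B:

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Discrete-time leaky integrate-and-fire neuron; returns spike times."""
    potential = reset
    spike_times = []
    for t, current in enumerate(input_current):
        potential = leak * potential + current  # leak some charge, integrate input
        if potential >= threshold:              # threshold crossed: fire a spike
            spike_times.append(t)
            potential = reset                   # reset after firing
    return spike_times


def spikes_to_current(spike_times, weight, steps):
    """Turn one neuron's spikes into weighted input current for the next."""
    current = [0.0] * steps
    for t in spike_times:
        current[t] += weight
    return current


steps = 10
spikes_a = simulate_lif([0.3] * steps)                            # neuron A: steady input
spikes_b = simulate_lif(spikes_to_current(spikes_a, 0.7, steps))  # neuron B: driven by A

print(spikes_a)  # [3, 7]
print(spikes_b)  # [7]
```

Neuron B does not fire on A’s first spike alone. It fires only after a second spike arrives before the first has leaked away. This timing-dependent behavior distinguishes spiking models from conventional artificial neurons.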

Superior capabilities: flexible and adaptable

-Dr. Van Rijmenam, leading technologist & futurist (link)

Superior distribution: energy efficient and resilient to noise and errors

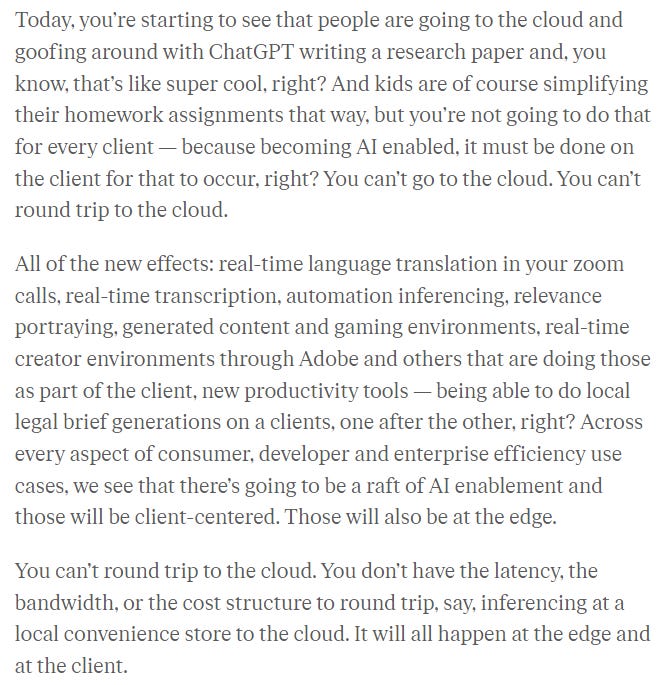

Neuromorphic computers don’t have the same physical limitations as quantum computers. They operate at room temperature, can even run on traditional hardware (GPUs), and face almost no limit on the number of bits being calculated. Moreover, unlike quantum computers, they’re highly energy efficient and resilient to noise and errors, which makes them well suited to scaling deep learning applications, especially at the edge/client. Why is this important for AI?

Intel (a leading innovator in neuromorphic development) CEO Pat Gelsinger (Q2 2023 earnings call):

For AI to be ubiquitous and valuable — it must have low latency. This can only be done at the edge/client level, using neuromorphic technology.
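Much of the claimed energy efficiency comes from event-driven computation. In a spiking system, work happens only when a neuron fires, whereas a conventional dense layer touches every weight on every step. The sketch below compares operation counts for the same layer computed both ways, assuming a hypothetical 5% of inputs spike in a given step. The function names and weights are hypothetical, and operation counts are only a rough proxy for energy, not a hardware measurement:

```python
def dense_layer(inputs, weights):
    """Conventional dense layer: every input is multiplied by every weight."""
    ops = 0
    outputs = [0.0] * len(weights[0])
    for i, x in enumerate(inputs):
        for j, w in enumerate(weights[i]):
            outputs[j] += x * w
            ops += 1
    return outputs, ops


def event_driven_layer(spikes, weights):
    """Event-driven layer: only inputs that spiked (value 1) trigger any work."""
    ops = 0
    outputs = [0.0] * len(weights[0])
    for i, s in enumerate(spikes):
        if s:  # silent inputs cost nothing
            for j, w in enumerate(weights[i]):
                outputs[j] += w
                ops += 1
    return outputs, ops


n_in, n_out = 1000, 100
# Deterministic pseudo-weights in [-0.5, 0.5], purely for illustration
weights = [[((i * 31 + j * 17) % 11 - 5) / 10 for j in range(n_out)] for i in range(n_in)]
spikes = [1 if i % 20 == 0 else 0 for i in range(n_in)]  # hypothetical 5% activity

dense_out, dense_ops = dense_layer(spikes, weights)
event_out, event_ops = event_driven_layer(spikes, weights)
print(dense_ops, event_ops)  # 100000 5000
```

Both versions produce identical outputs, but at this activity level the event-driven version performs 20x fewer operations. The savings shrink as more inputs become active.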

Current state and outlook

The human brain is highly complex and not completely understood. While neuromorphic hardware advancements have been exciting, much work remains on the software needed to effectively leverage brain-like architectures. That being said, according to Grand View Research, the neuromorphic market was $4.2B in 2022 (6x larger than quantum!) and is estimated to reach $20B by 2030, 10x larger than quantum’s estimate!
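These market figures imply a steep growth rate. The sketch below computes the implied compound annual growth rate (CAGR) from $4.2B in 2022 to $20B in 2030, using only the numbers cited in this article. The helper name is hypothetical. Note that the neuromorphic and quantum forecasts come from different firms, so the ratio between them is only a rough comparison:

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate that takes start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


neuromorphic_growth = implied_cagr(4.2, 20.0, 2030 - 2022)  # $B, Grand View Research
ratio_to_quantum_2030 = 20.0 / 2.0                          # vs. Yole's $2B quantum estimate

print(f"{neuromorphic_growth:.1%}")  # 21.5%
print(ratio_to_quantum_2030)         # 10.0
```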

So what does this have to do with crypto and web3?

Web3 is simply the next generation of data networking. You must have a medium/long-term view on hardware evolution (especially paradigm shifts!) to have an informed view on how networking infrastructure will evolve. The systems are highly dependent on each other.

Moreover, there are also direct investment opportunities in decentralized neuromorphic networks such as Dynex (DNX). As the technology becomes more production-ready, I have no doubt many more will pop up (as we’re seeing today with AI-specific decentralized GPU networks).

To be clear

I still believe quantum will be a paradigm shift in computing, specifically for computationally intensive applications like cryptography and simulations. But when it comes to the distinct needs of deep learning, I believe neuromorphic computing will be superior, and it should therefore be getting much more attention today.

Disclosure: Omnichain Capital has no position in DNX but may in the future.

Cover photo: Generated by DALL-E 2