Disclosure: Omnichain Capital may have positions in tokens mentioned in this report.

Revisiting money legos

The term “money legos” became popular during the DeFi summer of 2020, when application composability drove an explosion in DeFi utility and adoption. Because the financial primitives (stablecoins, lending, trading, yield farming, etc.) were permissionless and trustless, they could all work together seamlessly, like legos. Instead of continuously creating applications from the ground up, developers could simply build on existing DeFi infrastructure, driving fintech innovation at an unprecedented pace. As a result, DeFi usage grew exponentially.

This financial renaissance was possible because the open-source code (DeFi applications) and blockspace compute (Ethereum) were trust-minimized, and therefore fully interoperable. However, two key issues are preventing more impactful crypto innovation:

1) Limited compute power: Blockchains are ledgers of ownership and are therefore optimized for security and transaction throughput, not necessarily for complex compute capabilities. It's no secret that most DeFi application front-ends are hosted off-chain on non-composable centralized servers. Therefore, composability to date has been limited to low-compute interactions.

2) Downside to open-source code: As previously mentioned, open-source software accelerates innovation; however, it can also create perverse incentives. The latest bull market revealed that developers (and users) are mainly incentivized to optimize token value rather than application utility. To game this, developers forked application code (with very slight alterations) to quickly create applications users understood, even if the product itself wasn’t very innovative. Application usage was then mostly driven by users looking to acquire tokens (there are exceptions…but not many) rather than by the incremental utility of the application. This created a highly reflexive economy that ultimately crashed in spectacular fashion.

Both limited compute and perverse incentives have stifled crypto’s progress of late. Fortunately, I believe the next wave of crypto innovation will soon be unlocked with the rise of composable cloud services.

Enter compute legos

Composable cloud services (aka trust-minimized/decentralized compute) are peer-to-peer networks for compute, memory, storage, and all related services on top (akin to their centralized counterparts: Amazon Web Services, Microsoft Azure, Google Cloud). However, the trust-minimized nature of these networks enables full composability, similar to the financial primitives in DeFi. Instead of “money legos,” composable cloud services enable “compute legos,” which have two major implications that address the issues mentioned above:

1) Composability is no longer confined to blockchain compute: Trust-minimized compute projects are essentially oracles that connect blockchains to off-chain compute. This opens up a massive amount of compute power, since each node can incrementally contribute to the network’s capacity (vs blockchain nodes that must all process the same state). The result is composability with (theoretically) unlimited compute capacity.

2) Optimal developer incentives: In addition to compute, composable cloud service providers can offer a marketplace for trust-minimized applications/microservices, enabling developers to monetize their code based on usage. Other developers can still fork this code, but unless they add incremental value to the stack, they will not benefit from its usage. This incentivizes developers to publish the highest quality code to drive the most utility for consumers. Software is on the cusp of a massive renaissance.
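As a rough illustration of point 1 above, the toy model below contrasts the two scaling behaviors: a replicated network, where every node processes the same state, and a partitioned network, where each node takes on distinct work. The function names and the per-node throughput figure are hypothetical and not drawn from any specific project, and real networks also pay coordination and verification overhead that this sketch ignores.

```python
def replicated_capacity(node_count: int, ops_per_node: float) -> float:
    """Usable capacity when every node re-executes the same work.

    Adding nodes adds redundancy (security), not throughput, so the
    network's capacity is capped at what a single node can process.
    """
    return ops_per_node if node_count > 0 else 0.0


def partitioned_capacity(node_count: int, ops_per_node: float) -> float:
    """Usable capacity when each node works on a different task.

    Idealized upper bound: every node contributes its full capacity.
    """
    return max(node_count, 0) * ops_per_node


if __name__ == "__main__":
    OPS_PER_NODE = 100.0  # hypothetical throughput of one node, arbitrary units
    for nodes in (1, 10, 1_000):
        print(
            f"{nodes:>5} nodes | replicated: {replicated_capacity(nodes, OPS_PER_NODE):>9,.0f}"
            f" | partitioned: {partitioned_capacity(nodes, OPS_PER_NODE):>9,.0f}"
        )
```

Under these assumptions, replicated capacity stays flat as nodes join while partitioned capacity grows linearly, which is the sense in which off-chain compute is "theoretically" unlimited.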

Interestingly, most of the projects below instead market themselves on the more tangible advantages of trust-minimized compute. In theory, compared to centralized peers, trust-minimized cloud providers are cheaper, have lower latency, have more reliable uptime, and are more secure. While all these features are good selling points, I think they will pale in comparison to the explosion of innovation that compute legos will drive; it's just harder to articulate today the new capabilities that will emerge from this technology.

“We believe that freeing applications from platforms will catalyze an innovation wave from the global developer community. Humanity will benefit from a new world of feature rich services that are hard for us even to imagine.”

-Fluence Project Manifesto

Today’s competitive landscape:

Consumer-focused compute supply:

These projects were some of the earliest entrants into the space, and have mostly focused on supplying network compute from consumer hardware (desktops, laptops, Raspberry Pi, etc.). While Render focuses exclusively on GPU rendering, Golem and iExec offer more general-purpose compute.

Datacenter-focused compute supply:

The next cohort of projects has taken a slightly different approach, sourcing compute power from independent datacenters rather than just consumers. Although this may initially hinder network decentralization, it should provide a much more powerful compute solution at maturity.

Fungible compute:

Instead of running a trust-minimized compute marketplace, these projects provide one large fungible operating system. This helps facilitate a more continuous service, rather than task-based compute solutions.

Compute + blockspace:

While the previous networks can be considered layer 2s (oracles to off-chain compute), the final two projects are layer 1s that combine smart contract blockspace with cloud computing capabilities. Store leverages off-chain compute while Internet Computer integrates it directly on-chain.

Adoption to-date

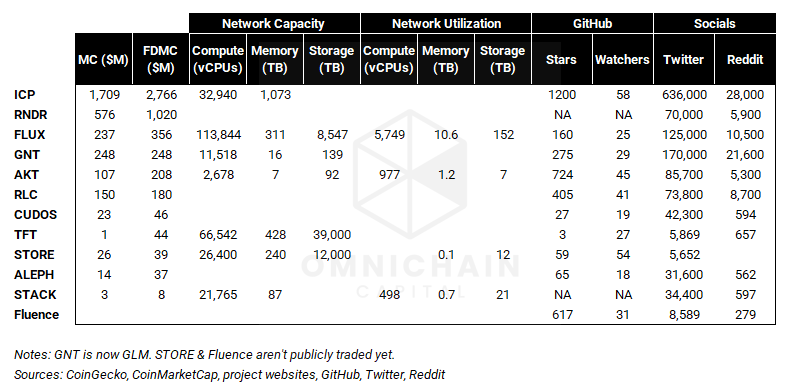

The sector is still in its early days, and many of the projects have yet to meaningfully launch their mainnet. To put the sector's numbers into context, in 2014 it was estimated that AWS had between 1.4 and 5.6 million servers of capacity. Servers can hold roughly 50 to 250 cores (vCPUs), illustrating the orders of magnitude difference between AWS’s scale in 2014 and trust-minimized compute today (only 250k total vCPUs).
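The size of that gap can be worked out directly from the figures quoted above. The sketch below is back-of-the-envelope arithmetic only: it multiplies the low and high ends of each estimate, and the variable names are illustrative.

```python
import math

# Figures quoted in the text; the ranges are estimates, not measurements.
AWS_SERVERS_2014 = (1_400_000, 5_600_000)  # low and high server estimates
CORES_PER_SERVER = (50, 250)               # low and high cores (vCPUs) per server
TRUST_MINIMIZED_VCPUS = 250_000            # total across the sector today

# Pair the low ends and the high ends to bound AWS's 2014 vCPU capacity.
aws_vcpus_low = AWS_SERVERS_2014[0] * CORES_PER_SERVER[0]
aws_vcpus_high = AWS_SERVERS_2014[1] * CORES_PER_SERVER[1]

# How many times larger AWS (2014) was than the whole sector today.
ratio_low = aws_vcpus_low / TRUST_MINIMIZED_VCPUS
ratio_high = aws_vcpus_high / TRUST_MINIMIZED_VCPUS

print(f"AWS 2014 vCPUs: {aws_vcpus_low:,} to {aws_vcpus_high:,}")
# AWS 2014 vCPUs: 70,000,000 to 1,400,000,000
print(f"Gap: {ratio_low:,.0f}x to {ratio_high:,.0f}x")
# Gap: 280x to 5,600x
print(f"Orders of magnitude: {math.log10(ratio_low):.1f} to {math.log10(ratio_high):.1f}")
# Orders of magnitude: 2.4 to 3.7
```

Even at the conservative end, AWS's estimated 2014 capacity was a few hundred times the sector's current total.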

Like all disruptive tech, composable cloud services will start with niche use cases, then move upmarket as their utility ultimately surpasses that of legacy software. While this scenario is likely 5-10 years away from really taking off, meaningful experimentation has already started. Adoption will happen gradually, then suddenly, so the time to pay attention is now.