Disclosure: M31 Capital has positions in several tokens mentioned below.

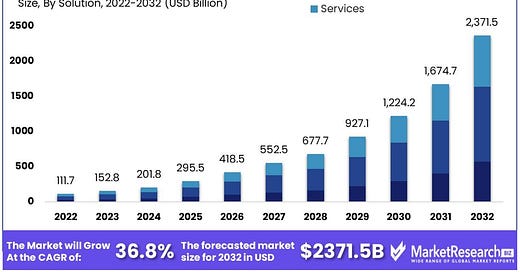

In just over a year since ChatGPT’s debut, generative AI has arguably become the most influential global narrative. OpenAI’s early success drove a surge in investor interest in large language models (LLMs) and AI applications, attracting $25B in funding in 2023 (up 5x YoY!) in pursuit of a potential multi-trillion-dollar market opportunity.

As I’ve previously written, AI and crypto technologies complement each other well, so it’s not surprising to see a growing AI ecosystem emerging within web3. Despite all the attention, I’ve noticed a lot of confusion about what these protocols do, what’s hype vs. real, and how they all fit together. This post will map out the web3 AI supply chain, define each layer in the tech stack, and explore the various competitive landscapes. By the end you should have a basic understanding of how the ecosystem works and what to look out for next.

Web3’s AI tech stack

Infrastructure Layer

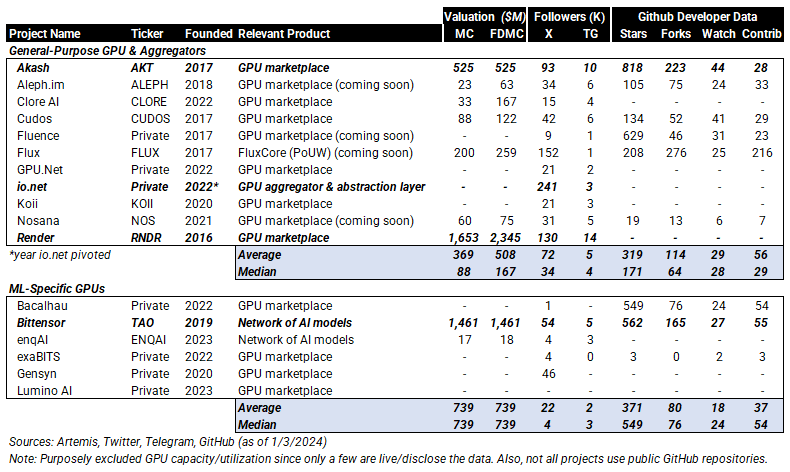

Generative AI is powered by LLMs, which run on high-performance GPUs. LLMs have three main workloads: training (model creation), fine-tuning (sector/topic specialization), and inference (running the model). I’ve segmented this layer into general-purpose GPU, ML-specific GPU, and GPU aggregators, which differ in the workloads they can handle and the use cases they serve. These P2P marketplaces are crypto-incentivized to ensure secure decentralization, but it’s important to note that the actual GPU processing occurs off-chain.

General-purpose GPU: Crypto-incentivized (decentralized) marketplaces for GPU computing power that can be used for any application. Because it is general-purpose, this computing resource is best suited to model inference (the most used LLM workload). Early category leaders include Akash and Render, but with many new entrants emerging, it’s unclear how protocol differentiation will play out. Although compute is technically a commodity, web3 demand for permissionless, GPU-specific compute should continue to grow exponentially over the next decade and beyond as we integrate AI more into our daily lives. Key long-term differentiators will be distribution and network effects.

ML-specific GPU: These marketplaces are more specific to machine learning (ML) applications and can therefore be used for model training, fine-tuning, and inference. Unlike general-purpose marketplaces, these protocols can better differentiate through the overlay of ML-specific software, but distribution and network effects will also be key. Bittensor has an early lead, but many projects are launching soon.

GPU Aggregators: These marketplaces aggregate GPU supply from the previous two categories, abstract away networking orchestration, and overlay ML-specific software. They are like web2 VARs (value-added resellers) and can be thought of as product distributors. These protocols offer more complete GPU solutions that can run model training, fine-tuning, and inference. Io.net is the first protocol in the category, but I expect more competitors to emerge given the need for more consolidated GPU distribution.
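To make the segmentation concrete, here is a minimal Python sketch of which workloads each category is described as suited to in this post. The names `Workload`, `SUPPORTED_WORKLOADS`, and `categories_for` are purely illustrative and do not come from any protocol's API; the mapping simply restates the three descriptions above.

```python
from enum import Enum


class Workload(Enum):
    TRAINING = "training"        # model creation
    FINE_TUNING = "fine-tuning"  # sector/topic specialization
    INFERENCE = "inference"      # running the model


# Hypothetical lookup table: the workloads each marketplace category is
# described as suited to in this post (not data pulled from any protocol).
SUPPORTED_WORKLOADS = {
    "general-purpose GPU": {Workload.INFERENCE},
    "ML-specific GPU": {Workload.TRAINING, Workload.FINE_TUNING, Workload.INFERENCE},
    "GPU aggregator": {Workload.TRAINING, Workload.FINE_TUNING, Workload.INFERENCE},
}


def categories_for(workload: Workload) -> list[str]:
    """Return the marketplace categories suited to the given workload."""
    return [
        name
        for name, workloads in SUPPORTED_WORKLOADS.items()
        if workload in workloads
    ]


if __name__ == "__main__":
    for workload in Workload:
        print(f"{workload.value}: {', '.join(categories_for(workload))}")
```

Read this way, inference is the one workload all three categories compete for, while training and fine-tuning are only served by the ML-specific marketplaces and the aggregators.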

Middleware Layer

The previous layer enables permissionless access to GPUs, but middleware is needed to connect this computing resource to the blockchain in a trust-minimized manner (i.e., for seamless use by smart contracts). Enter zero-knowledge proofs (ZKPs), a cryptographic method by which one party (the prover) can prove to another party (the verifier) that a given statement is true without revealing anything beyond the fact that it is true. In our case, the “statement” is that a given output was produced by the specified LLM from a specific input.
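For readers who want to see the prover/verifier relationship in code, below is a toy Python sketch of a classic textbook zero-knowledge protocol: a Schnorr proof of knowledge of a secret exponent, made non-interactive with a hash-based (Fiat-Shamir) challenge. This is not zkML and not how any protocol named in this post works; proving an LLM inference requires far heavier machinery. The group parameters are deliberately tiny and insecure, and all function names are illustrative.

```python
import hashlib
import secrets

# Toy group parameters (NOT secure): G = 2 generates a subgroup of prime
# order Q = 11 in the integers modulo P = 23.
P, Q, G = 23, 11, 2


def challenge(public_key: int, commitment: int) -> int:
    """Fiat-Shamir challenge: hash the public values to a number mod Q."""
    data = f"{G}|{public_key}|{commitment}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret: int) -> tuple[int, int, int]:
    """Prover: show knowledge of `secret`, where public_key = G**secret mod P."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(Q)  # fresh randomness masks the secret
    commitment = pow(G, nonce, P)
    response = (nonce + challenge(public_key, commitment) * secret) % Q
    return public_key, commitment, response


def verify(public_key: int, commitment: int, response: int) -> bool:
    """Verifier: accept iff G**response == commitment * public_key**c (mod P)."""
    c = challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P


if __name__ == "__main__":
    public_key, commitment, response = prove(secret=7)
    print("honest proof accepted:", verify(public_key, commitment, response))
    print("tampered proof accepted:", verify(public_key, commitment, (response + 1) % Q))
```

The verifier only ever sees the public key, the commitment, and the response, never the secret itself. In the zkML setting the same pattern applies at a much larger scale: the private values are the model parameters and data, and the public statement is that the output came from the claimed model.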

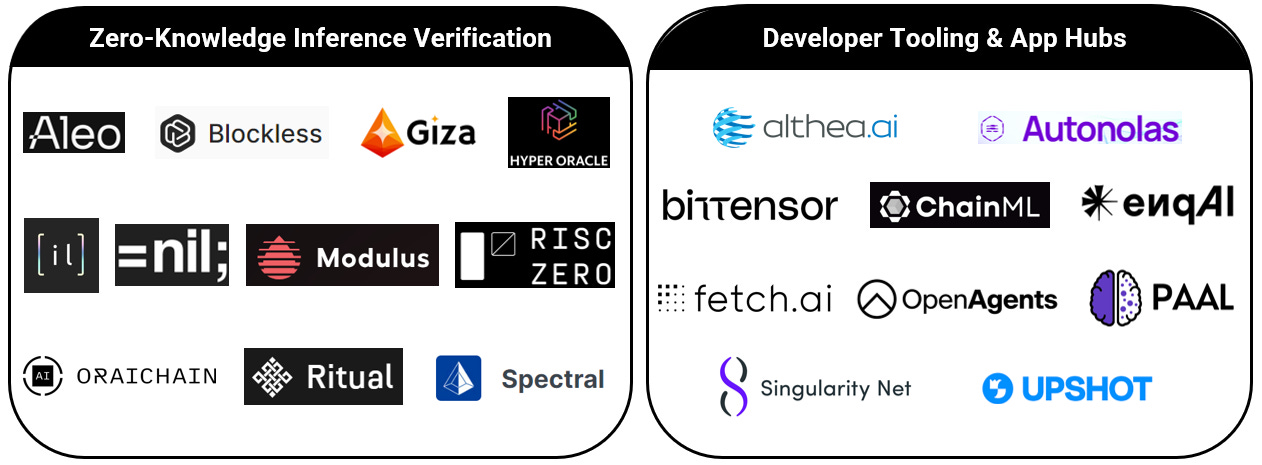

Zero-Knowledge (ZK) Inference Verification: Decentralized marketplaces for ZKP verifiers to bid on the opportunity to verify (for compensation) that inference outputs are accurately produced by the desired LLM (while keeping the data and model parameters private). Although ZK technology has come a long way, ZK for machine learning (zkML) is still in its early days and must get cheaper and faster to be practical. When it does, it has the potential to dramatically open up the web3 & AI design space, enabling novel capabilities and use cases. For now, =nil;, Giza, and RISC Zero lead developer activity on GitHub. Protocols like Blockless are well positioned whichever ZKP providers win, since they act more as aggregation & abstraction layers (ZKP distribution).

Developer Tooling & Application Hubs: In addition to ZKPs, web3 developers require tooling, software development kits (SDKs), and services to efficiently build applications like AI agents and AI-powered automated trading strategies. (An AI agent is a software entity that carries out operations on behalf of a user or another program with some degree of autonomy, using some representation of the user’s goals.) Many of these protocols also double as application hubs, where users can directly access finished applications that were built on their platforms (application distribution). Early leaders include Bittensor, which currently hosts 32 different “subnets” (AI applications), and Fetch.ai, which offers a full-service platform for developing enterprise-grade AI agents.

Application Layer

And finally, at the top of the tech stack, we have user-facing applications that leverage web3’s permissionless AI processing power (enabled by the previous two layers) to complete specific tasks for a variety of use cases. Again, this portion of the market is still nascent, but early examples include smart contract auditing, blockchain-specific chatbots, metaverse gaming, image generation, and trading & risk-management platforms. As the underlying infrastructure continues to advance and ZKPs mature, next-gen AI applications will emerge with functionality that’s difficult to imagine today. It’s unclear if early entrants will be able to keep up or if new leaders will emerge in 2024 and beyond.

Investor outlook: While I’m bullish on the whole AI tech stack, I generally believe infrastructure & middleware protocols are better bets today given the uncertainty in how AI functionality will evolve over time. However it does evolve, web3 AI applications will no doubt require massive GPU power, ZKP technology, and developer tooling & services (i.e., infrastructure & middleware).