Disclosure: Omnichain Capital may have positions in tokens mentioned in this report.

Background: Bandwidth Optimization

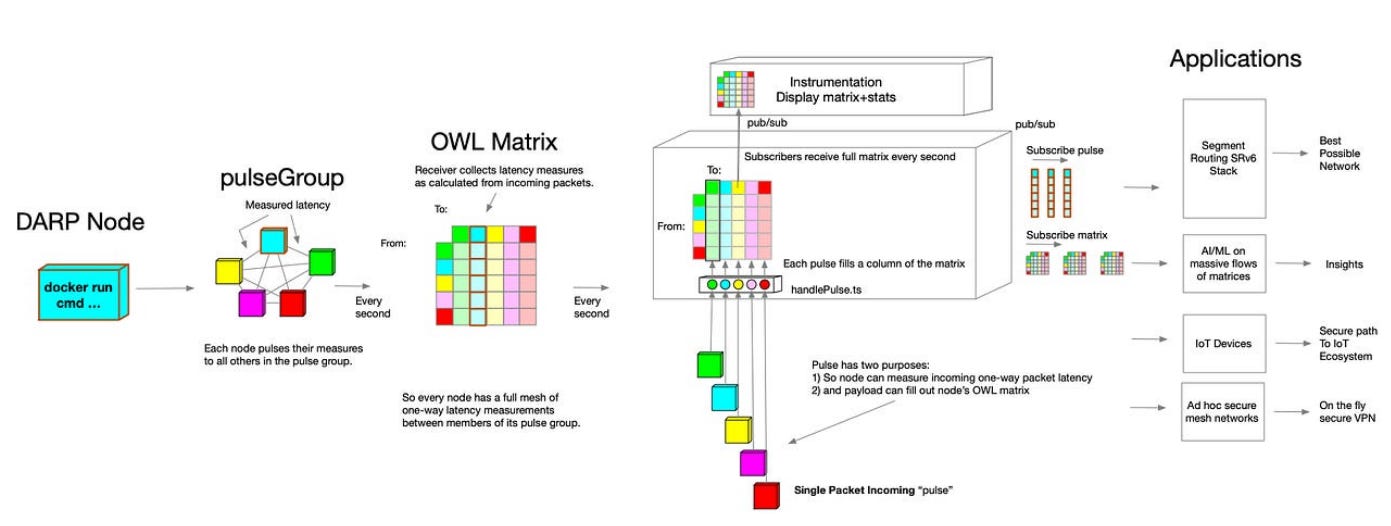

Syntropy (NOIA) was founded in 2017 with an initial vision of optimizing internet connectivity through a blockchain-based data orchestration network. The project’s patented DARP (Distributed Autonomous Routing Protocol) technology powers decentralized systems that dynamically route data packets along better performing paths, minimizing network latency.

Its first product, Syntropy Stack (launched January 2021), leverages DARP specifically for internet connectivity, promising clients improved bandwidth reliability, performance, security, and resource utilization. The go-to-market strategy focused primarily on web2 customers (mainstream market), but since blockchain tech has yet to meaningfully cross the chasm (explained below), sales cycles were too long and network adoption stagnated.

(Crossing the Chasm, Geoffrey A. Moore)

(wikipedia dot com)

After 2 years of suboptimal growth, Syntropy leadership started reconsidering its strategy in the fall of 2022. Although its Stack product struggled to find mainstream traction, the core DARP technology worked great, improving latency by an average of 29 ms (up to 1,000 ms!). What if the protocol could be repurposed for the web3 market (innovators and early adopters) instead?

On June 1st, 2023, a week after closing a $4M private fundraise led by Alpha Transform Group (link), Syntropy announced its intention to pivot to decentralized on-chain data management via blog post (link) and AMA (link).

Why on-chain data management?

The web3 end-market is much easier for blockchain projects to sell into (vs. web2 end-market)

Vital segment of the web3 tech stack with enormous long-term TAM

Solving the data availability problem (next section) is the key to long-term blockchain scaling & interoperability, and would therefore be a highly coveted and valuable asset

Current solutions have a lot of issues (cost, performance, centralization, etc.)

Uniquely positioned to disrupt market given core competencies (latency minimization & network optimization)

“The blockchain data sector is rapidly expanding, with organizations resorting to centralized solutions such as Alchemy, and investing millions in infrastructure to engage with data. Indeed, blockchain data has emerged as a highly coveted resource in today's digital landscape. However, the industry faces a critical challenge - the issue of Web3 scalability that must be addressed to accommodate its exponential growth.” (Syntropy Press Release)

Understanding The Data Availability (DA) Problem

For those not familiar with DA, I highly recommend reading WTF is Data Availability, by Yuan Han Li. It’s a quick and easy explanation of the topic that non-technical readers will be able to understand.

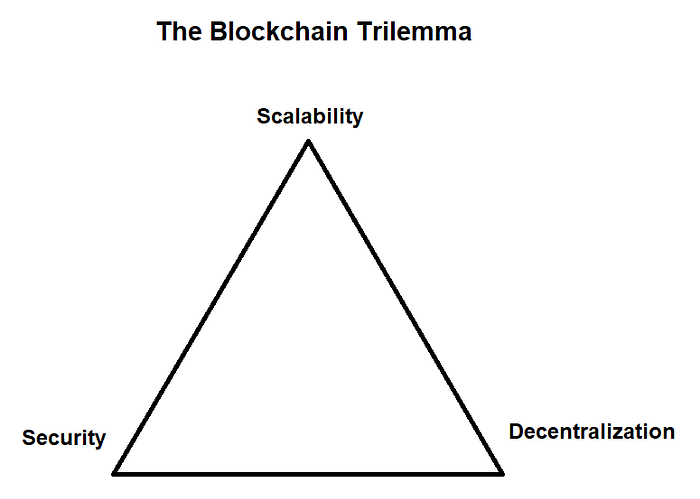

In short, data must be “made available” by block producers (miners/validators) so full nodes can download the transaction data, check the producers’ work, and keep them honest. The DA problem is that blockchains don’t “force” nodes to download this data, since doing so would massively increase the hardware requirements of full nodes, diminishing network decentralization and security. So how do we ensure DA as networks scale? This is the crux of the blockchain trilemma…

The DA problem is not limited to monolithic chains; modular architectures arguably depend on DA even more. Resolving this issue is key for next-gen scaling and interoperability/automation solutions like rollups, state proofs, account abstraction, and intents. These solutions are vital for upgrading web3 UI/UX, underscoring the profound importance of DA.

The Solution? Data Availability Sampling (DAS)

Here’s a great thread by Nick White of Celestia that explains DAS like you’re five (unfortunately, you can no longer embed tweets in Substack; link to tweet thread below picture)

TL;DR: DAS allows light nodes to verify DA without downloading all block data by conducting multiple rounds of random sampling for small portions of block data. The more rounds completed, the more confidence the light node will have that the data is available. This solution ensures DA without sacrificing decentralization, but does it scale? Not on its own: blockchains must also scale DA throughput.
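To make the "more rounds, more confidence" intuition concrete, here is a minimal Python sketch. It rests on an assumption not spelled out in this post: that block data is erasure-coded so a block producer must withhold at least some fraction of the chunks to make the block unrecoverable (often quoted as roughly half for a simple 2x extension). Under that assumption each random sample has at least that chance of hitting a missing chunk. The function names and the example fraction are hypothetical, and sampling is treated as independent for simplicity.

```python
import math


def das_confidence(withheld_fraction: float, samples: int) -> float:
    """Chance that at least one of `samples` independent random samples
    lands on a withheld chunk, i.e. that withholding is detected.

    `withheld_fraction` is an assumed lower bound on the share of chunks
    an attacker must hide to make the block unrecoverable.
    """
    if not 0.0 < withheld_fraction <= 1.0:
        raise ValueError("withheld_fraction must be in (0, 1]")
    if samples < 0:
        raise ValueError("samples must be non-negative")
    return 1.0 - (1.0 - withheld_fraction) ** samples


def samples_needed(withheld_fraction: float, target_confidence: float) -> int:
    """Smallest number of samples whose detection chance reaches the target."""
    if not 0.0 < target_confidence < 1.0:
        raise ValueError("target_confidence must be in (0, 1)")
    if withheld_fraction >= 1.0:
        return 1  # every sample hits a missing chunk
    return math.ceil(
        math.log(1.0 - target_confidence) / math.log(1.0 - withheld_fraction)
    )
```

Under the assumed 50% withholding threshold, `samples_needed(0.5, 0.99)` comes out to seven samples, which illustrates why a light node can get high confidence from a handful of small downloads rather than the full block.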

New solutions coming to market (Celestia, Polygon Avail, EigenDA) aim to scale data space for DA by creating app-chains that do nothing besides DA services. The problem, as explained below by polynya, is economic sustainability of these networks.

Other On-Chain Data Management Issues

Public blockchains claim to be permissionless, allowing anyone to access and use the network. While technically true, in practice it’s a lot more nuanced. In order to interact with the blockchain (run a dApp), monitor real-time data, and/or analyze historical data, one must run a full node. However, nodes are increasingly expensive and complicated to manage, limiting access to these supposedly open and public networks.

Solutions to-date have primarily been third-party infrastructure providers that run nodes on your behalf — but unfortunately most are centralized, expensive, slow, and don’t scale very well, hampering dApp development and user adoption. Coupled with the DA problem, on-chain data management is ripe for disruption.

Now back to Syntropy…

The New Vision: Syntropy Data Availability Layer

Syntropy’s DARP technology will be repurposed to optimize blockchain data traffic instead of web2 internet traffic. The ultimate vision is a multi-chain data availability layer that offers a decentralized and scalable means to access, retrieve, and interact with real-time and historical on-chain data.

The platform will have three main functions:

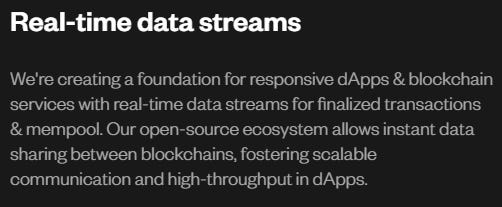

Real-time on-chain data streams: Publisher nodes push on-chain data to the network, broker nodes manage the access and availability of the data, and subscribers, as the end consumers, integrate the on-chain data into their dApps, analytics platforms, or algorithms, through SDKs.

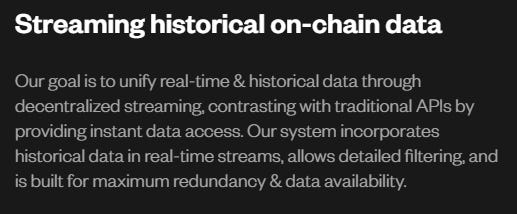

Historical on-chain data streams: Bridges the gap between real-time and historical data, unlocking potential for a host of applications, including data availability and indexing solutions, among others.

Data availability: Nodes can disseminate both transactional and availability proof data, substantially bolstering blockchain throughput. This development is pivotal for constructing efficient light clients and high-performance rollup solutions, applicable across any blockchain where this model fits.
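The publisher/broker/subscriber flow described for real-time streams above follows the general publish-subscribe pattern. The sketch below is a generic in-memory illustration of that pattern only; it is not Syntropy's SDK or protocol, and the `Broker` class, the stream name, and the message fields are all hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class Broker:
    """Hypothetical in-memory broker: tracks who subscribed to which
    stream and fans each published message out to those subscribers."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, stream: str, handler: Handler) -> None:
        # A subscriber (dApp, analytics job, algorithm) registers a callback.
        self._subscribers[stream].append(handler)

    def publish(self, stream: str, message: dict) -> int:
        # A publisher node pushes on-chain data; the broker delivers it.
        handlers = self._subscribers.get(stream, [])
        for handler in handlers:
            handler(message)
        return len(handlers)  # number of subscribers reached


# Usage: one subscriber collecting messages from a made-up stream name.
broker = Broker()
received: List[dict] = []
broker.subscribe("ethereum.blocks", received.append)
delivered = broker.publish("ethereum.blocks", {"height": 1, "tx_count": 2})
```

In a decentralized network the broker role would be spread across many nodes and would also handle access control and payment; the single-process version here only shows how the three roles relate.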

Big picture: Syntropy is building the underlying data infrastructure for a wide range of next-gen web3 functionality:

On-Chain Data Management Landscape

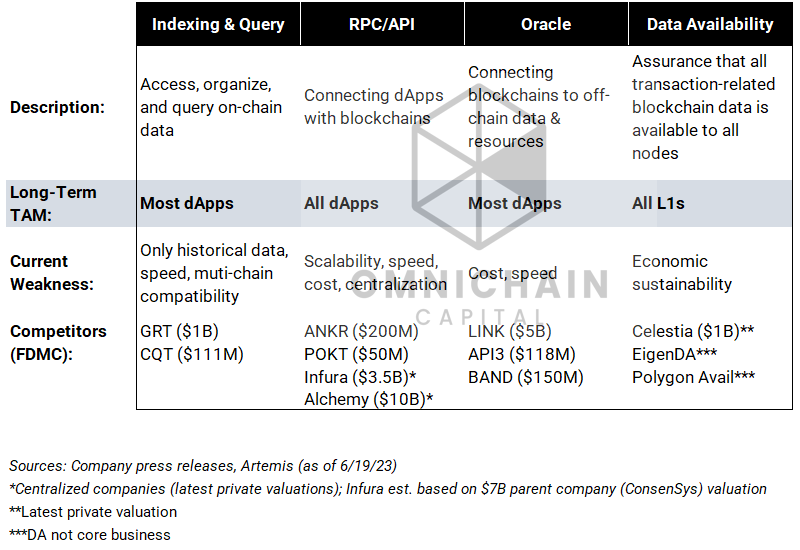

On-chain data management is an essential part of web3 infrastructure that enables blockchains to interact with dApps, other blockchains, and/or the off-chain “real world”. It’s a key building block for all blockchains and dApps across the entire web3 ecosystem. As such, its long-term TAM opportunity is massive. Syntropy believes its new platform will compete in parts of all four categories below:

Syntropy Key Differentiation:

Instead of running outsourced infrastructure for clients, Syntropy incentivizes existing nodes to stream both real-time & historical data. Coupled with their DARP networking optimization technology, Syntropy believes it can deliver streams with much lower latency, lower costs, and higher scalability, while sustaining network decentralization. Moreover, since Syntropy will have multiple sources of network fees, its DA layer has a better chance of economic sustainability vs. the DA app-chains mentioned previously.

Early Validation & Next Steps

Syntropy recently ran successful benchmarking tests against other data streaming providers on both Aptos (link) & Ethereum (link)

Next Steps:

Fully operational MVP product for real-time on-chain data streams by year-end

Comprehensive roadmap release end of Q2 / early Q3

Incremental educational content to be released as year progresses

TL;DR:

Syntropy is an underfollowed small-cap protocol ($30M FDMC) whose recent pivot to on-chain data management went mostly unnoticed

On-chain data management is a massively important & valuable segment of web3; however, it’s fraught with issues today

Syntropy believes its proprietary networking optimization technology and unique approach to on-chain data streams can potentially disrupt market incumbents

Early benchmarking tests suggest they may be correct

Photo cred: HBO’s Silicon Valley