Why Autonomous AI Needs Decentralized Data Access

plus a look inside SQD Network's best-in-class solution

By 2030, autonomous AI agents are positioned to become the default execution layer for both knowledge work and digital services, carving out a direct market well above $50b and acting as the key conduit for trillions in generative-AI productivity gains.

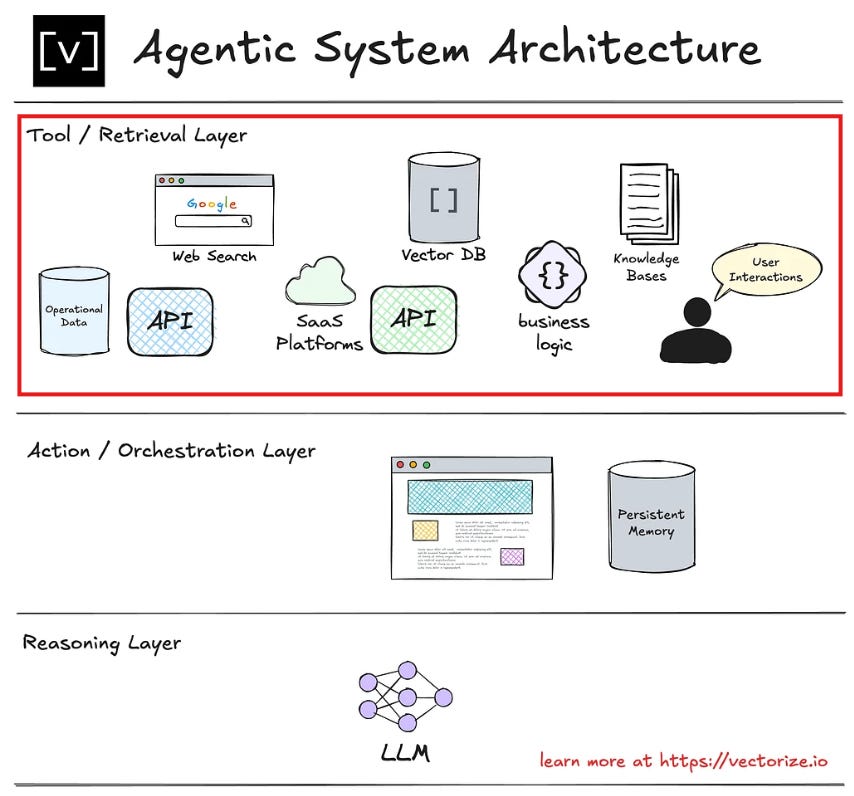

Autonomous AI systems, whether they trade assets, curate content, or orchestrate global supply chains, operate only as well as the information they ingest. If that information is locked behind proprietary APIs or controlled by single entities, agents are throttled on four fronts: availability, integrity, cost, and scope.

Open, decentralized data rails remove these chokepoints by giving agents on-demand, verifiable access to a continuously expanding pool of knowledge. This deep dive explains why that matters, how decentralization delivers, and where the benefits show up in practice, spotlighting SQD Network as today’s most promising solution.

Limitations to Centralized Data Access

As the US AI Safety Institute notes, the issue with current AI agent systems is the integration of trusted internal instructions and untrusted external data:

AI agent hijacking arises when a system lacks a clear separation between trusted internal instructions and untrusted external data, and is therefore vulnerable to attacks in which hackers provide data containing malicious instructions designed to trick the system.

More specific risks include:

Single Point of Failure: If the server or service goes down, or if access is revoked, the AI agent is left blind. For instance, a trading algorithm drawing prices from one exchange’s API could be frozen out during a critical market move if that API fails.

Censorship and Control: A restricted data source can selectively censor or prioritize information, injecting bias or preventing the AI from seeing the full picture. This may be intentional (a platform choosing what data to expose) or incidental (regional restrictions, paywalls, etc.). A content moderation AI, for example, might be less effective if it only receives data that a platform owner allows, possibly hiding posts that reveal bias in the AI’s decisions.

Lack of Coverage (Data Silos): AI often needs to synthesize data from many sources. Centralized systems rarely cover everything. An AI assistant answering questions might need web search data, news, social media, and proprietary databases. If each is controlled by different gatekeepers (Google, Twitter, etc.), the agent might be denied some sources or face high costs to access them. Siloed data leads to blind spots in AI understanding.

Unreliable or Manipulable Data: When only a few providers supply data, any mistake or manipulation on their end directly corrupts the AI’s inputs. In finance, there have been instances of oracle manipulation: if an AI trading bot has a single-source price feed, an attacker could feed it false prices to trigger bad trades. Data tampering could mean skewed model training or faulty outputs, with potentially catastrophic results (imagine an AI medical diagnosis tool given manipulated patient data).

Decentralization Holds the Key

Decentralized data infrastructure refers to a distributed network of nodes and protocols that collect, store, and serve data without a central authority. Such infrastructure is permissionless (anyone can join, contribute, or query data) and trustless (integrity is maintained via cryptographic consensus and economic incentives, not a single “trusted” server). This kind of infrastructure is an ideal match for autonomous AI agents.

Scalability: Decentralized data networks scale by adding additional nodes and participants, rather than scaling up a single server farm. This horizontal scaling means as data demand grows (more AI agents, more complex queries), the network can flexibly grow with it. Traditional centralized solutions struggle with this multi-source scale, as they’d need to consolidate heterogeneous data in one place (a huge engineering lift and a potential bottleneck). In open architectures, each new blockchain or data stream can be picked up by nodes without asking for permission, allowing the data pool to expand organically. This not only serves current needs but future-proofs AI systems: whatever new data source or blockchain comes online, a permissionless network can start indexing it, and AI agents can immediately tap in. The result is virtually unlimited data scalability, a crucial advantage as we foresee AI models consuming orders of magnitude more data in the coming years.

Reliability and Fault Tolerance: With no central server, decentralized networks achieve reliability through redundancy and distribution. Nodes are often geographically dispersed and independently operated; the network is robust to any single point of failure (be it a technical outage, a cyber attack, or even a regulatory action in one jurisdiction). For AI, this means the data feed is always on. Even if some nodes fail, others ensure continuity. Decentralized networks also tend to have built-in recovery and self-healing: if one node’s data is out of sync, consensus can correct it. From an AI operations standpoint, this level of reliability far exceeds a typical single-API SLA. It’s closer to the reliability of the Internet itself. Additionally, because no one entity can shut it down, an AI agent connected to a truly decentralized data network cannot be deplatformed or cut off from information due to external decisions. This is vital for AI in mission-critical roles (imagine an autonomous emergency response system that must remain informed during a disaster - it can’t afford a data outage because a central server room got flooded).

Auditability and Transparency: Trustless data systems create immutable logs and audit trails. Every piece of data can be traced, and in many cases, the process by which data was generated or processed is transparent. This is a stark contrast to black-box data services where data might be altered or removed without anyone knowing. For AI agents making consequential decisions, the ability to audit the data pipeline is key. It enables explainable AI in a new way: not only can we inspect an AI model’s internal reasoning, but we can also verify the external facts it used. For example, if an AI-driven investment fund makes a trade, one can later audit that the price feed at that exact moment was authentic (perhaps a hash on a blockchain confirms the feed’s state) and that the decision followed from real data, not a corrupted feed. One concrete approach is the use of cryptographic proofs. Decentralized data platforms can generate verifiable proofs of SQL queries, meaning an AI could query a large dataset and get back both the answer and a proof that this answer was derived from a certain trusted dataset without modification. This kind of end-to-end auditability is only possible with trustless design. It builds confidence for investors, regulators, and users that AI decisions are based on facts, not faulty or biased data. In domains like content moderation or legal AI, such transparency could even be demanded: proving an AI’s decision was based on an auditable trail of data can resolve disputes.

Autonomy and Self-Sufficiency: As noted, an AI agent’s autonomy is limited if it must continually seek permission to access data or if it relies on intermediaries. Permissionless data architectures remove those shackles. An AI agent can directly interact with a blockchain network (via an RPC node or an indexer) without asking anyone’s consent. It can consume data from a decentralized pub/sub service or file storage by just addressing the content (using content IDs, for instance) with no login required. This makes the AI a first-class citizen of the data ecosystem, rather than a client of a corporate API. The strategic benefit is that the AI can evolve and operate on its own schedule. If it needs to analyze a new data source at midnight, it can just start pulling from the network. If it wants to share some of its results, it can publish them to the network for others. In a sense, permissionless data networks give AI agents agency in the information space, allowing them to participate autonomously in the decentralized data economy. This is especially powerful in multi-agent systems: autonomous AIs could coordinate by writing to and reading from a blockchain (their shared source of truth) without any central orchestrator.

AI Use Cases Empowered by Decentralized Data

Autonomous Agents in Finance: Finance runs on millisecond-level, fault-free data. Trading bots, robo-advisors, and onchain portfolio AIs therefore pull price, reserve, and rate feeds from decentralized networks rather than a single API. Multi-source oracles such as Chainlink aggregate dozens of exchanges and attach cryptographic proofs, blocking price manipulation that has plagued single-feed DeFi hacks. Portfolio agents query onchain balances and histories directly, gaining real-time transparency and sidestepping bank databases. Permissionless access also widens the data aperture: sentiment from decentralized social platforms or whale-wallet analytics can be folded in for alpha. Because all data is timestamped on a ledger, trades remain audit-ready—a prerequisite for compliance and post-trade review. Onchain asset-management DAOs push autonomy further, letting AI funds execute smart-contract trades around the clock with verifiable, tamper-proof inputs. Net result: faster, more resilient markets and a pipeline for novel financial products.

Content Moderation and Curation: Centralized platforms moderate in opaque silos, raising bias and censorship concerns. A decentralized network flips the model: all posts live on a public ledger or IPFS, so a moderation AI can scan the full global feed, flag violations, and log its actions onchain for anyone to audit. Community feedback loops tune the model, boosting trust. Agents can cross-check claims against public fact registries or incorporate user-supplied tags without relying on a single company’s dataset. The same open data fuels recommendation AIs that aggregate onchain engagement signals while preserving privacy—no one firm profiles users. By recording both rules and training data openly, these systems crack the “black-box” problem and let communities fork or refine moderators at will.

Search and Information Retrieval: Traditional search monopolizes web data behind corporate indexes and throttled APIs. Decentralized crawlers and incentivized indexes aim to publish that corpus openly so any AI can query it. Agents tap multiple trustless sources—academic papers, blockchain records, archived sites—without Google’s permission, and can verify results via onchain proofs or transparent ranking contracts. Storage networks like IPFS or Arweave guarantee permanence, letting agents surface content long after it disappears from the Web2 domain. This “open knowledge layer” erodes data moats, spurring competition in AI search and exposing each answer’s verifiable trail.

Supply-Chain and IoT Automation: A modern supply chain spans sensors, shippers, warehouses, and retailers, all emitting siloed data. Recording those events on a permissionless ledger gives autonomous logistics AIs a single, trusted timeline. Signed IoT readings (temperature, location, humidity) stream onchain; agents reroute cargo or adjust warehouse climate in real time, confident the data is genuine. Participants no longer need reciprocal system access: the ledger is their shared interface. Procurement AIs auto-order when onchain signals show delays; compliance reports and purchase orders are likewise written back for machine-to-machine coordination. Transparent ledgers also expose anomalies, enabling instant counterfeit detection and reducing recalls. The payoff: lower costs, near-just-in-time inventory, and better risk management across global trade.

SQD Network: Best-in-Class Solution for Decentralized Data

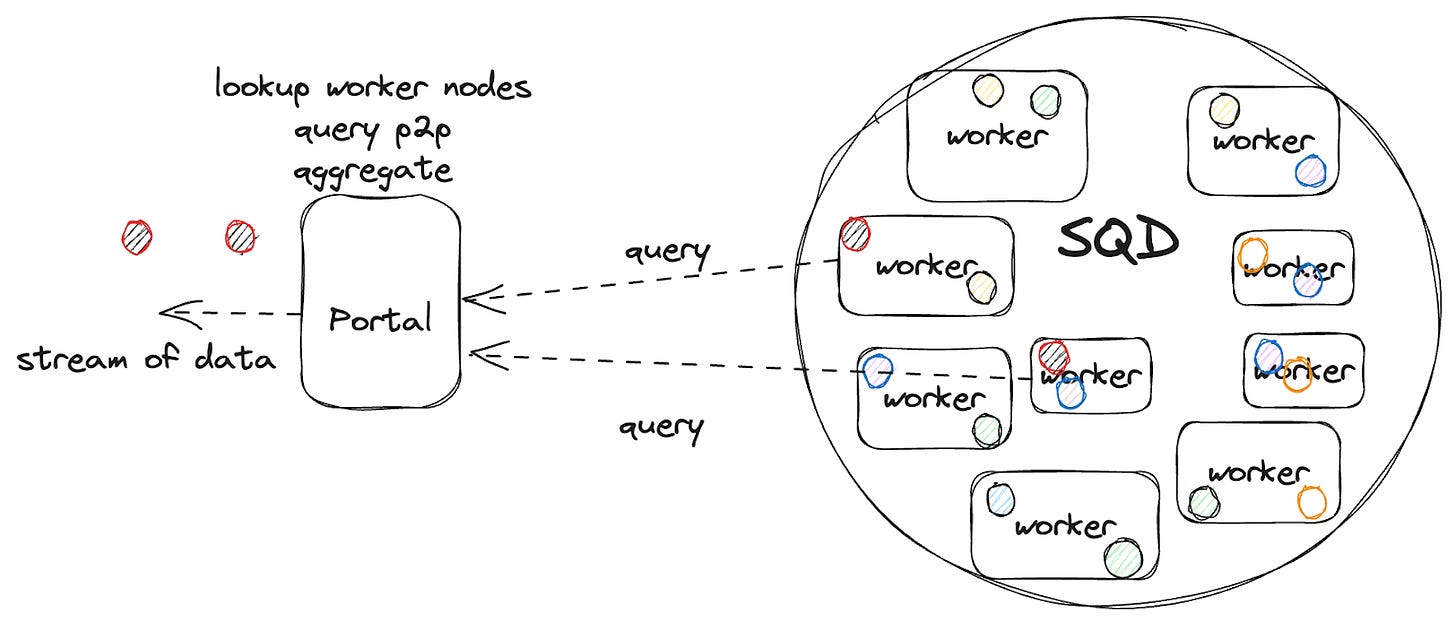

SQD Network has emerged as a leading platform for permissionless, trustless data access, effectively positioning itself as a decentralized data lakehouse for Web3. It blends the strengths of multiple approaches, offering a one-stop infrastructure for ingesting, processing, and querying data across many sources.

Modular, Scalable Architecture: Unlike monolithic indexers, SQD’s architecture is modular at every stage (data ingestion, transformation, storage, query). This design allows it to scale and optimize far beyond competitors. Developers can tailor how data flows through the pipeline, choosing the right tool for each job (e.g., quickly filter data in streaming, then load into a SQL database for heavy aggregation). This flexibility means AI developers aren’t constrained by a one-size-fits-all approach; they can structure data pipelines to suit their model’s needs. The modular design also prevents bottlenecks: one can scale out just the extraction layer or the query layer as needed, maintaining performance even as data volume grows. For AI that might hammer the system with thousands of queries, this means consistent low-latency responses.

Comparison of a monolithic The Graph indexer node (left) vs. the modular SQD architecture (right). The Graph’s node encapsulates GraphQL querying, processing (WASM-based subgraphs), and its own Postgres storage backed by a single blockchain archival node. In contrast, SQD’s design separates concerns: a decentralized SQD Network feeds data into a Squid SDK layer, which can interface with multiple storage backends (like Postgres or BigQuery) and serve different APIs (GraphQL, REST, etc.) simultaneously. This modular approach enables near-zero-cost data access and superior batch processing, as noted in benchmarks, and allows integration of external data sources (IPFS, web APIs, cloud storage) into the pipeline.

Multi-Chain and Heterogeneous Data Support: SQD Network is built for the reality of a multi-chain world. It currently processes historical data from 200+ networks, allowing AI systems to gather knowledge across all ecosystems in one place. For instance, a cross-chain DeFi risk AI could use SQD to pull positions from Ethereum, BSC, and Solana simultaneously. Beyond blockchains, SQD’s roadmap explicitly includes bridging to offchain data sources in a trustless manner. It’s “developing coprocessors and RAG (Retrieval Augmented Generation) features” to connect with offchain data via zero-knowledge proof markets and TEEs. This forward-looking feature is aimed at AI: RAG is a technique where an AI model retrieves relevant snippets of knowledge to augment its context (widely used in GPT-based chatbots to give them up-to-date info). SQD’s plan to facilitate RAG means an AI agent could query SQD’s network not only for onchain events but also for, say, relevant offchain documents or datasets that have been verifiably linked. In essence, SQD Network is becoming a unified data layer that spans both Web3 and Web2 data in a trustless way; exactly what autonomous AI agents will need for holistic understanding.

Performance and Cost Efficiency: From an investor standpoint, a solution that is both technically superior and cost-effective is likely to capture market share. SQD shines here. By using efficient Rust-based indexing and allowing custom data pruning and filtering at the source, it avoids unnecessary bloat. The network has demonstrated up to 100× faster data extraction and 90% cost reduction compared to traditional blockchain data querying approaches. Part of these savings come from not re-indexing irrelevant data (developers can define precisely what to extract) and from batch processing multiple blocks of data together, an area where SQD excels. For AI applications that might sift through years of historical data to find patterns, these performance gains make previously infeasible analyses possible. Additionally, the cost savings (in terms of computing and infrastructure) can be passed on to users, making decentralized data economically competitive with centralized data warehouses.

Developer-Friendly and AI-Ready: SQD provides robust developer tools (the Squid SDK in TypeScript, etc.) and supports GraphQL and SQL querying. This means existing AI engineers and data scientists can easily integrate with the platform using familiar query languages and tooling. The SDK allows building custom “squids” (indexer microservices) which can even be deployed on SQD’s cloud platform for ease. Essentially, it bridges the gap between blockchain data and conventional data analytics. By positioning as the data layer for AI, SQD is aligning its roadmap (like the RAG features) to what AI developers will need. This proactive integration is a competitive edge; other networks might handle raw data, but SQD aims to provide structured, AI-ready information (imagine querying not just raw transactions, but getting time-series aggregates or embeddings of contract activity directly from the network in future).

SQD Network's AI Agent Partnerships and Integrations

“Autonomous” AI stems from the ability to access data without intermediaries, using direct crypto payment rails. The “intelligence” part rests on the ability to reason about the provenance and the quality of the data. These core principles make the design of SQD Network (open p2p infrastructure with the payments and coordination managed onchain), essentially inevitable.

Dima Zhelezov, Co-Founder of SQD Network

SQD is rapidly becoming the data backbone for the decentralized AI agent economy, with integrations spanning agent frameworks (ElizaOS, Symphony, KRAIN), AI compute (io.net), and leading agent networks (Guru, Fetch.AI). These partnerships empower AI agents with real-time, normalized, multi-chain blockchain data, enabling a new wave of automation, analytics, and intelligent agent-based applications across Web3.

1. ElizaOS

Integration:

SQD Network has developed an official plugin for ElizaOS, a popular open-source AI agent framework.

The plugin enables Eliza agents to access and process onchain data (e.g., ERC20 transfers, Uniswap swaps) directly from the SQD decentralized data lake.

How it works:

Providers: Continuously inject up-to-date blockchain data into the agent’s context on every loop, ensuring responses are always data-rich.

Actions: On-demand data extraction and export (JSON, CSV, Parquet) triggered by agent decisions or user prompts.

Leverages SQD SDK 2.0’s “stateless streams” for high-throughput, indexer-free data access.

Use Cases:

Natural language queries for onchain events.

Automated data extraction and export for DeFi analytics, compliance, and research.

Foundation for building more complex, multi-chain, multi-contract, and symbol-based queries.

2. Symphony

Integration:

Symphony, a modular AI agent network, partnered with SQD Network to empower its agents with real-time, multi-chain blockchain data.

Symphony’s agent framework will utilize SQD’s decentralized data streams to enhance agent intelligence and autonomy.

How it works:

Symphony agents can query onchain data (e.g., DeFi events, token transfers) via SQD’s infrastructure, supporting advanced automation and analytics.

The integration is designed to scale with Symphony’s vision of a “Billion Agent World,” enabling agents to make more informed, data-driven decisions.

Use Cases:

DeFi automation, onchain monitoring, cross-chain analytics, and agent-based trading strategies.

Enabling Symphony agents to act as onchain data oracles for other protocols.

3. io.net

Integration:

io.net, a decentralized AI compute network, is collaborating with SQD Network to bring onchain data into its AI compute marketplace and agent ecosystem.

How it works:

SQD provides io.net agents with access to real-time and historical blockchain data, enhancing the capabilities of AI models and compute tasks that require Web3 context.

Use Cases:

AI-powered trading bots, DeFi risk assessment, and real-time analytics.

Enabling io.net’s AI models to ingest onchain data for training, inference, or real-time decision-making.

4. KRAIN AI

Integration:

KRAIN AI, an AI agent network focused on DePIN and Web3 automation, is integrating SQD as a core data provider for its agent infrastructure.

How it works:

KRAIN agents will utilize SQD’s data lake to access normalized, multi-chain blockchain data for automation, monitoring, and analytics.

SQD’s integration is highlighted as a key step in enabling KRAIN’s vision of “AI agents as a service” for the decentralized economy.

Use Cases:

Automated DeFi strategies, cross-chain asset management, and onchain intelligence for DePIN applications.

5. Guru Network

Integration:

Guru Network, a Web3 automation and AI compute layer, leverages SQD’s data infrastructure to power its Flow Orchestrator and AI Processors with reliable, normalized, multi-chain data.

SQD acts as a data backbone for Guru’s business process automation and decentralized AI agent workflows.

Use Cases:

DeFi automation, decentralized social applications, and analytics dashboards.

6. Fetch.AI

Integration:

Fetch.AI’s autonomous agents access structured blockchain data via SQD’s network, enabling advanced automation and analytics across DeFi, NFTs, and RWA tokenization.

Use Cases:

Liquidity monitoring, price surveillance, asset management, and Web3 process automation.

SQDGN: An AI Agent Reimagining Retail Trading (Powered by SQD Network)

SQD’s signature AI partner, SQDGN, is pioneering a new agent-powered model for retail trading, built directly on SQD Network’s data infrastructure. SQDGN leverages SQD’s modular indexing architecture to surface deep, real-time on-chain data, across tokens, wallets, protocols, and flows, that is simply inaccessible through traditional retail interfaces. This data backbone powers a modular RAG pipeline, coupled with an LLM that serves as the agent’s interface. The result is an experience where retail traders are onboarded with transparent, explainable baseline strategies, and where every trade, insight, and recommendation is driven by verifiable on-chain data via SQD.

The relationship is symbiotic: SQDGN serves as a first flagship application demonstrating the power of SQD Network’s infrastructure to fuel new classes of autonomous AI agents. For SQD, it is an early commercial proof point for how its data network can power high-frequency, high-value agent use cases. For SQDGN, SQD provides the raw data foundation and technical differentiation that allows it to move far beyond the shallow heuristics most retail trading bots rely on today.

The opportunity is significant: across the ~600,000 retail traders active in this segment, the goal is to bring the 90% who churn today back into the market, with better outcomes, better transparency, and an experience that compounds user understanding over time. SQDGN is an early signal of where agent-powered applications, and composable AI/data stacks, are headed.

Tokenomics 2.0: Demand for Data = SQD Value Accrual

Tokenomics 2.0, to be formally announced and implemented in the coming weeks, will make the SQD economy market-driven, modular, and multi-chain ready, with onchain revenue and fewer levers for governance to touch.

Here’s what’s changing:

The old “buyback and burn” loop is gone. Rewards are shifting from token emissions to actual user fees.

Data access will now be priced by an open market. If you want to query the network, you either lock SQD or pay a subscription to rent it from SQD holders.

SQD holders will be able to earn yield by fulfilling “Provision Offers”, short-term leases where they lock SQD in exchange for fees.

A Fee Switch mechanism has been built into Portals, off for now, but ready to turn on if needed. Once live, it will apply a small usage fee even to self-staked SQD, ensuring all activity contributes back to the network.

The architecture will support easy Portal deployments across chains like Base and Solana, opening up new user bases and payment options (USDC, SOL).

Bottom-line: Tokenomics 2.0 turns SQD into a real market good, where access to bandwidth and data is priced dynamically, and where supply-side actors (SQD holders, workers) are rewarded based on real demand, not just inflationary emissions.

Decentralized Data: Prerequisite for Next-Gen AI-Driven Services

As AI continues its rapid advancement, one thing has become clear: data is the bedrock on which AI agents stand. The quality, availability, and integrity of that data will determine how far autonomous AI can go. Unliked limited centralized providers, decentralized data networks ensure AI agents have a constant, verifiable feed of information, immune to single-point failures and control by outside interests.

Among the projects racing to supply that foundation, SQD Network stands out for its modular design, multi-chain reach, AI-ready query stack, and real-world performance wins. As regulators demand system transparency, users demand data security & privacy, and models demand ever-larger context windows, the combination of AI intelligence atop blockchain truth will define the next decade of software.

Decentralized data infrastructure isn’t just a technical novelty; it’s a prerequisite for the next generation of AI-driven services across finance, media, supply chains, and beyond.

About M31 Capital

M31 Capital is a global investment firm dedicated to crypto assets and blockchain technologies that support individual sovereignty.

Website: https://www.m31.capital/

Twitter: https://twitter.com/M31Capital